Understanding dark web search is essential for any organization that cares about digital risk, brand safety, or data leakage. In this guide, I’ll explain what people mean by the dark web, how legitimate discovery and monitoring happen, the real risks involved, and practical, non-actionable ways organizations protect themselves. This professional, evidence-based primer is designed for security teams, decision makers, and informed readers; it is not a how-to.

What people actually mean by dark web

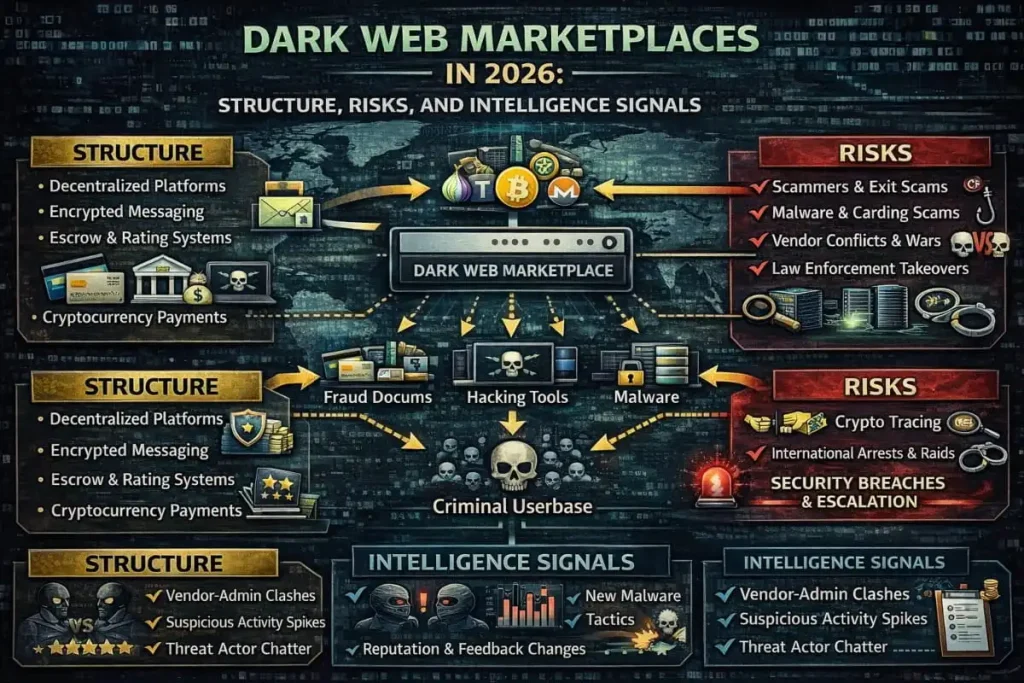

When people say the dark web, they usually refer to hidden parts of the internet that standard search engines don’t index. These areas host everything from private forums and whistleblower platforms to criminal marketplaces and abandoned services. A dark web search attempts to find mentions of an organization, exposed credentials, or leaked data inside those spaces, but the term covers a spectrum of approaches, not just one method.

Surface, deep, and dark: a quick framing

- Surface web: indexed pages you can find through Google or Bing.

- Deep web: legitimately private content (databases, private dashboards) that search engines don’t crawl.

- Dark web: intentionally hidden networks and services that require special clients or addresses to reach.

This framing helps teams define goals: are you looking for leaked credentials, references to your brand, or threats to customers? The intended outcome drives whether you need a periodic scan, continuous monitoring, or API-driven alerts.

Why organizations invest in dark web discovery

Companies don’t monitor the dark web out of curiosity; they do it to reduce risk. The main drivers are:

- Early detection of credential leaks that could lead to account takeover.

- Brand protection: blocking fraudulent resellers or impersonators.

- Intelligence for incident response: understanding the scope of a breach.

- Regulatory and customer obligations to detect and remediate exposed personal data.

When done correctly, a dark web search program helps security teams convert noise into action: triaging findings, validating threats, and prioritizing remediation.

How legitimate dark web discovery works (high-level only)

Important: This high-level overview of digital risk protection focuses on safety and process. It does not provide step-by-step instructions for accessing hidden networks.

Legitimate discovery programs combine three broad components:

- Collection: authorized, ethical crawling and feeds from trusted sources that index public portions of hidden networks and forums.

- Enrichment: linking scraped text to internal records (e.g., mapping a found username to an employee account).

- Alerting and response: prioritizing verified, high-risk findings and feeding them to SOC/IR workflows.

This model scales from manual investigations by expert analysts to automated solutions that feed continuous alerts into SIEMs or ticketing systems.
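The enrichment step above can be sketched in a few lines. This is a minimal illustration only, assuming findings arrive from an authorized vendor feed as plain identifiers; the `Employee`, `Finding`, and `enrich` names are hypothetical and do not correspond to any particular product.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Employee:
    """Hypothetical internal directory record."""
    email: str
    department: str
    privileged: bool


@dataclass(frozen=True)
class Finding:
    """Hypothetical item delivered by an authorized vendor feed."""
    identifier: str  # e.g. an email address mentioned in the feed
    source: str      # label of the feed that reported it


@dataclass(frozen=True)
class EnrichedFinding:
    finding: Finding
    employee: Optional[Employee]  # None when no internal record matches


def normalize(identifier: str) -> str:
    """Trim and lowercase so casing or stray spaces do not block a match."""
    return identifier.strip().lower()


def enrich(findings: Iterable[Finding],
           directory: Iterable[Employee]) -> List[EnrichedFinding]:
    """Attach the matching directory record, if any, to each finding."""
    index = {normalize(e.email): e for e in directory}
    return [EnrichedFinding(f, index.get(normalize(f.identifier)))
            for f in findings]


# Illustrative data only; the addresses use reserved example domains.
directory = [Employee("alice@example.com", "finance", True)]
findings = [
    Finding(" Alice@Example.com ", "vendor-feed-a"),
    Finding("stranger@example.org", "vendor-feed-a"),
]
for item in enrich(findings, directory):
    status = "matched" if item.employee else "no internal match"
    print(item.finding.identifier.strip(), "->", status)
```

Findings with no internal match are kept rather than dropped, so analysts can still review them or use them for trend analysis.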

Key capabilities to evaluate (and why they matter)

When you compare options (internal tools, enterprise platforms, or third-party services), look for capabilities that reduce false positives and give actionable context:

- Signal quality: Are sources curated and vetted?

- Enrichment: Does the tool map findings to company assets or identity graphs?

- Speed of detection: Is monitoring continuous or episodic?

- Safety & compliance: Does the vendor avoid illegal access and adhere to privacy and data-handling laws?

- Integration: Can findings feed your SOC, ticketing system, or developer workflows?

A quality program focuses on risk reduction and legal compliance, not harvesting raw lists of sensitive information.

Comparing monitoring approaches

| Capability / Model | DIY Investigations | Managed Dark Web Monitoring | API / Platform Integration |
|---|---|---|---|
| Coverage | Limited to analyst effort | Broad, curated sources | Customizable via feeds |
| Speed | Slow (ad hoc) | Continuous, quicker alerts | Real-time via integration |
| Signal quality | Highly variable | High (vendor vetted) | Depends on provider |
| Safety / Legal risk | Higher if poorly handled | Vendor assumes risk controls | Vendor + customer share responsibility |
| Cost | Low tooling cost, high labor | Predictable subscription | Flexible, scales with usage |
| Best for | Small teams, investigations | Security-first orgs | DevOps, automated workflows |
| Relevant offering example | Internal triage | Dark Web Monitoring | Darkweb data API integration |

Note: The table is conceptual; vendors differ in how they define coverage and safety. If you need a side-by-side vendor shortlist for Dexpose, I can create one tailored to your stack.

Common legitimate use cases (with examples)

- Credential leak detection: finding exposed email/password combos tied to employees and triggering an Email data breach scan to check for risk.

- Data leak discovery: identifying exposed PII or internal documents and prioritizing takedown requests.

- Threat actor tracking: aggregating chatter about targeted attacks or extortion attempts to inform defensive posture.

- Brand abuse monitoring: spotting counterfeit offers or impersonation that could harm customers.

To improve context and response, these use cases often combine dark web signals with other datasets, such as Dexpose, open source intelligence (OSINT), social media intelligence, and internal telemetry.

What good detection doesn’t look like

Harmful programs either produce noise or cross ethical/legal lines. Warning signs include:

- Mass scraping with no vetting (high false-positive rates).

- Publishing raw lists of leaked credentials to teams without context.

- Selling access to illicit forums or data.

- Overreliance on single-source searches that miss buried threats.

A defensible program emphasizes verification, enrichment, and a documented chain of evidence for each meaningful finding.

How findings are validated and prioritized

Not every hit is actionable. A good process includes:

- Source validation: Is the source credible? Is the claim new or recycled?

- Identity linking: Does the exposed identifier map to a real employee, customer, or system?

- Risk scoring: How likely is the exposure to be exploited? What assets are in play?

- Triage workflow: High-risk hits generate IR tickets; low-risk items are archived for trend analysis.

This triage reduces analyst fatigue and gives security teams clear decisions to remediate or monitor.
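As a rough sketch of how the four checks above might combine into a triage decision, consider the following. The weights, the 0 to 3 criticality scale, and the `IR_THRESHOLD` value are illustrative assumptions, not an industry standard; a real program would tune them against its own history of findings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidatedFinding:
    """Hypothetical record produced after analyst or automated validation."""
    source_trusted: bool     # source validation: is the source credible?
    is_new: bool             # source validation: new claim, not recycled
    identity_matched: bool   # identity linking: maps to a real account
    asset_criticality: int   # risk scoring input: 0 (low) to 3 (critical)


# Assumed cut-off on a 0-10 scale; tune to your own alert volume.
IR_THRESHOLD = 7


def risk_score(f: ValidatedFinding) -> int:
    """Return an illustrative 0-10 score from the validation answers."""
    score = 0
    if f.source_trusted:
        score += 2
    if f.is_new:
        score += 2
    if f.identity_matched:
        score += 3
    # Clamp so an out-of-range criticality cannot distort the scale.
    score += min(max(f.asset_criticality, 0), 3)
    return score


def triage(f: ValidatedFinding) -> str:
    """Route high-risk hits to incident response, archive the rest."""
    if risk_score(f) >= IR_THRESHOLD:
        return "open_ir_ticket"
    return "archive_for_trends"


fresh_leak = ValidatedFinding(True, True, True, 3)
recycled_post = ValidatedFinding(False, False, False, 1)
print(triage(fresh_leak))     # open_ir_ticket
print(triage(recycled_post))  # archive_for_trends
```

Keeping the score additive and the threshold explicit makes each decision easy to explain later, which supports the documented chain of evidence a defensible program needs.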

Integrations that make dark-web intelligence operational

Intelligence is most valuable when it plugs into your workflows:

- SIEM / SOAR ingestion for automated playbooks.

- Ticketing systems for incident tracking and remediation.

- Identity platforms to map alerts to known personnel.

- Custom APIs that deliver structured results into engineering pipelines, for example a Darkweb data API integration that powers automated blocking or password resets.

Integrations convert signal into action and cut mean time to contain.
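To make "structured results" concrete, here is one possible shape for a validated finding serialized as a JSON line, a format many SIEM and log pipelines can ingest. The field names and the `to_siem_event` helper are assumptions for illustration; your SIEM or vendor will define its own schema.

```python
import json
from datetime import datetime, timezone

ALLOWED_SEVERITIES = {"low", "medium", "high"}


def to_siem_event(finding_id: str, category: str, severity: str,
                  matched_account: str, detected_at: datetime) -> dict:
    """Build a structured event from a validated finding (hypothetical schema).

    The event references the affected account but never carries the
    leaked secret itself, so raw credentials do not spread into logs.
    """
    if severity not in ALLOWED_SEVERITIES:
        raise ValueError(f"unknown severity: {severity!r}")
    if detected_at.tzinfo is None:
        raise ValueError("detected_at must be timezone-aware")
    return {
        "event_type": "darkweb_finding",
        "finding_id": finding_id,
        "category": category,
        "severity": severity,
        "matched_account": matched_account,
        "detected_at": detected_at.astimezone(timezone.utc).isoformat(),
    }


def to_json_line(event: dict) -> str:
    """Serialize with sorted keys so downstream diffs stay stable."""
    return json.dumps(event, sort_keys=True)


event = to_siem_event(
    finding_id="f-001",
    category="credential_leak",
    severity="high",
    matched_account="alice@example.com",
    detected_at=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
)
print(to_json_line(event))
```

A playbook in a SOAR tool could then key on `severity` and `category` to decide, for instance, whether to open a ticket or trigger a password reset.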

Tools vs services: where each wins

- Tools and APIs: best for teams with existing detection pipelines that want to ingest structured feed data. (Think cost-efficient scale-ups using Darkweb data API integration.)

- Managed services: best for organizations that need curated intelligence without building an internal practice. They typically offer higher signal quality, SLAs, and analyst validation.

- Scanner products: some vendors advertise a quick Dark Web scan service or a Free Dark Web Report as a first look; these are useful for baseline discovery but usually lack continuous monitoring.

A blended approach often makes sense: start with a report to scope exposure, then shift to continuous monitoring or an API-driven model for production-grade coverage.

Safety and legal considerations

Searching hidden networks presents legal and reputational risks. Organizations must:

- Follow applicable laws and regulations where they operate. Many countries treat unauthorized access or data trafficking as criminal offenses.

- Maintain documented policies for handling found personal data to meet privacy obligations.

- Avoid activities that could be construed as facilitating criminal marketplaces or engaging with prohibited content.

In regulated jurisdictions, vendor contracts should include compliance attestations and clear boundaries on acceptable collection methods.

Practical governance checklist (quick)

- Maintain a written dark web monitoring policy.

- Require vendor proof of legal collection and takedown processes.

- Ensure findings are encrypted in transit and at rest.

- Assign data owners for remediation (identity, legal, PR).

This governance reduces legal risk and makes intelligence usable across the business.

Measuring ROI: what success looks like

Security leaders often look for measurable outcomes:

- Reduced time to detect exposed credentials.

- Number of incidents averted through timely takedowns or password resets.

- Reduction in successful phishing or account compromise events tied to detected leaks.

- Regulatory compliance metrics (e.g., number of consumer notifications prevented or performed correctly).

The program pays for itself when dark web discovery maps to improved containment and fewer incidents.
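The first metric, time to detect, is straightforward to compute once each finding records when the exposure was first observed and when your team detected it. The sketch below assumes you store those two timestamps per finding; the function names and the sample dates are purely illustrative.

```python
from datetime import datetime
from statistics import median
from typing import List, Sequence, Tuple

# Each record is (first_observed_exposure, detected_by_team).
Record = Tuple[datetime, datetime]


def hours_to_detect(records: Sequence[Record]) -> List[float]:
    """Elapsed hours between exposure and detection for each finding."""
    return [(detected - exposed).total_seconds() / 3600
            for exposed, detected in records]


def median_hours_to_detect(records: Sequence[Record]) -> float:
    """Median is used so one very slow detection does not skew the figure."""
    if not records:
        raise ValueError("no records to summarize")
    return median(hours_to_detect(records))


def percent_reduction(before: float, after: float) -> float:
    """Relative improvement from one period to the next, in percent."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100


# Made-up timestamps for two reporting periods.
previous_quarter = [
    (datetime(2024, 1, 1), datetime(2024, 1, 3)),    # 48 hours
    (datetime(2024, 1, 5), datetime(2024, 1, 6)),    # 24 hours
    (datetime(2024, 1, 10), datetime(2024, 1, 13)),  # 72 hours
]
current_quarter = [
    (datetime(2024, 4, 1, 0), datetime(2024, 4, 1, 12)),  # 12 hours
    (datetime(2024, 4, 5, 0), datetime(2024, 4, 6, 0)),   # 24 hours
    (datetime(2024, 4, 9, 0), datetime(2024, 4, 9, 6)),   # 6 hours
]

before = median_hours_to_detect(previous_quarter)  # 48.0
after = median_hours_to_detect(current_quarter)    # 12.0
print(f"Median time to detect fell by {percent_reduction(before, after):.0f}%")
```

Reporting the same figure each quarter gives leadership a trend line rather than a single number.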

Common misconceptions about dark web discovery

- “If it’s on the dark web, it’s immediately usable.” Not always; many posts are scams, recycled, or unverifiable.

- “DIY is cheaper and just as good.” Not if your analysts spend hours validating low-quality hits.

- “Google can find the dark web.” Mainstream search engines index the surface web; hidden services are not discoverable through ordinary search queries.

A pragmatic approach emphasizes signal quality over noisy breadth.

How Dexpose (or your security team) should approach a pilot

If you’re planning a pilot, a staged approach works best:

- Baseline: Run a Free Dark Web Report or targeted scan to identify immediate exposures.

- Validate: Have analysts verify high-risk findings and map them to assets.

- Integrate: Feed validated alerts into your SOC via Darkweb data API integration or a managed channel.

- Scale: Shift to continuous Dark Web Monitoring and measure outcomes.

Pilots help set realistic expectations about signal volume and remediation workload.

Technology trends shaping dark-web discovery

- Better entity matching: Identity graphs reduce false positives by linking aliases to known employees.

- Automated enrichment: Combining leaked content with internal asset inventories speeds triage.

- API-first vendors: Platforms offering clean integration points enable automated remediation workflows.

- Cross-signal fusion: Combining dark web signals with phishing telemetry and Social Media Intelligence produces higher-confidence alerts.

Adopting modern tooling turns otherwise noisy data into an operational advantage.

Risk reduction best practices (bulleted quick wins)

- Rotate and enforce unique credentials across critical systems.

- Run regular Email data breach scan checks and force resets on impacted accounts.

- Approve vendors only with documented legal collection practices.

- Prioritize high-value asset protection and automate remediation where possible.

These straightforward steps reduce the attack surface available to attackers if a leak appears.

When to escalate findings to legal, PR, or law enforcement

Escalate when evidence shows:

- Sensitive customer data has been exposed (PII, payment data).

- An extortion or credible threat is directed at your organization.

- There’s a linkage between the posted data and an active breach impacting systems.

Early, coordinated escalation reduces reputational and legal fallout.

Selecting a vendor: red flags and green flags

Green flags

- Transparent source curation and verification.

- Clear SLAs and integration options (APIs, SIEM connectors).

- References in your industry or proven case studies.

Red flags

- Promises of access to criminal marketplaces.

- Lack of legal compliance documentation.

- Overreliance on unsupervised scraping without context.

A vendor that prioritizes lawful collection and analyst validation typically delivers more reliable outcomes.

Implementation checklist for teams

- Define the scope (assets, email domains, high-value targets).

- Run an initial discovery (Free Dark Web Report) to assess exposure.

- Choose a model (managed monitoring, API integration, or hybrid).

- Connect alerts into existing IR and identity workflows.

- Measure outcomes quarterly and refine sources.

Following a clear roadmap reduces false starts and ensures program maturity.

Closing: responsible use and the role of continuous intelligence

Dark web search is not a silver bullet, but when implemented responsibly, it becomes a critical component of modern cyber defense. The goal is not to “catch everything” but to provide timely, verifiable signals that help teams contain incidents, protect customers, and make better decisions.

If your organization needs a practical next step, consider starting with a scoped Dark Web scan service or a Free Dark Web Report to identify immediate exposures. From there, mature into continuous Dark Web Monitoring with well-documented integrations and governance.

If you’d like, I can:

- Produce a tailored pilot plan for Dexpose with data flows and KPIs, or

- Draft a vendor comparison matrix that includes API integration options and compliance checks (including Oracle security scan readiness for enterprise environments).

Let me know which you prefer, and I’ll prepare a concise, implementation-ready brief.

Frequently Asked Questions

Q1: Is a dark web search illegal?

A: No. Monitoring for mentions of your brand or leaked data is generally legal when done using authorized, ethical methods and vendor-provided feeds. Activities that access restricted systems without permission can be illegal.

Q2: Will dark web monitoring stop breaches?

A: Monitoring alone cannot prevent breaches. However, it can detect exposed credentials or sensitive data early. This early visibility allows organizations to contain incidents and remediate risks faster.

Q3: What is the difference between a free report and continuous monitoring?

A: A free dark web report provides a one-time snapshot of potential exposures. Continuous monitoring, on the other hand, offers ongoing coverage with alerts and analyst validation. This ensures sustained risk reduction and faster response to new threats.

Q4: Can dark web findings be integrated into my SOC?

A: Yes, many platforms provide APIs and connectors to streamline integration. Validated results can be fed directly into SIEMs and SOAR workflows. This integration helps security teams act quickly on high-risk exposures.

Q5: How quickly should we act on a verified leak?

A: High-risk leaks, such as sensitive credentials or personal data, require immediate action. Organizations should perform password resets, contain the breach, and follow coordinated incident response protocols. Prompt remediation reduces potential damage and risk exposure.